How do you get started with your program evaluation?

This is Part 2 of the Program Evaluation Whitepaper series. The previous whitepaper covered what you should consider before starting a program evaluation. Please read that paper before continuing.

What have you already determined?

For this evaluation, you should have determined the following:

- Main or over-arching question(s)

- Main audience

- The primary reason for conducting this evaluation

- The resources (staff, expertise, budget, etc.) available to carry out this evaluation

- The types of data sources available

- The timeline or due date

- The decisions to be made based on the results

- Most importantly, a commitment to use the information found

Going through the question-and-answer process, you have probably already determined whether you need an evaluation that informs your audience about the program as it happens (formative) or whether you need an evaluation that looks at the completed program (summative).

Couple this with the resources available to you, and you probably have a pretty good idea of your scope. The fewer the resources, the narrower and less involved the evaluation process will be.

After you have determined the scope of your evaluation, you can get started writing questions.

What is the big picture?

The tricky part about writing questions is keeping your questions tied to your evaluation purpose and not allowing yourself to get sidetracked with extraneous questions. Your over-arching question is never really answered by itself in an evaluation; it is answered by compiling the answers (evidence) from a number of sub-questions.

It is important to get your sub-questions (i.e., your evaluation questions) right.

How do you frame questions?

When thinking about your questions, reflect on them in terms of the considerations above, along with whether or not you are looking to evaluate process, impact, or outcomes. Process evaluations help you see how a program outcome or impact was achieved, while impact and outcome evaluations look at the effectiveness of the program in producing change. Within the body of research on evaluation, you will find conflicting definitions of impact and outcome measurement—mostly about which actually comes first. The bottom line is they both look at change that occurs on the beneficiary’s part. Within the nonprofit sector, it will mostly be referred to as outcome measurement (we will refer to outcomes in this whitepaper as well), and these measurements will focus on changes that come from program involvement. These changes are shifts in knowledge, attitudes, skills, and aspirations (KASA), as well as long-term behavioral changes.

Most funders are concerned with nonprofits reporting outcomes.

Funders want to know, “Are you making a difference?” Outcome data answer that question, so keep the focus there. Process questions may look like, “What problems were encountered with the delivery of services?” or “What do clients consider to be the strengths of the program?” Outcome questions, by contrast, ask about end goals, such as “Did the program succeed in helping people change?” and “Was the program more successful with certain demographics than others?”

With the focus on actual change, outcome questions should ask about shifts in KASA that can be attributed to the participant’s involvement in the program. They should also ask about the ultimate goal of a particular program.

The chart below summarizes the types of evaluation questions. When discussing methods, quantitative refers to numeric information pulled from surveys, records, etc., and qualitative refers to more subjective, open responses that capture themes. A combination of the two is generally referred to as a ‘mixed methods’ approach.

| Evaluation Questions | What They Measure | Why They Are Useful | Methods |
| --- | --- | --- | --- |
| Process | How well the program is working; is it reaching the intended people? | Tells how well the plans developed are working; identifies any problems that occur in reaching the target population early in the process; allows adjustments to be made before the problems become severe | Quantitative, qualitative, or mixed |
| Outcome | Helps to measure immediate changes brought about by the program; helps assess changes in KASA (knowledge, attitudes, skills, and aspirations); measures changes in behaviors | Allows for program modification in terms of materials, resource shifting, etc.; tells whether or not programs are moving in the right direction; demonstrates whether or not (or the degree to which) the program achieved its goal | Quantitative, qualitative, or mixed |

Refer to your logic model when writing your outcome evaluation questions. Because you should have already listed outcome types (short term, intermediate term, and long term) and when they occur, your outcome questions should follow the progression listed in the logic model. Outcome questions should be written in a way that reflects the change you want to see, the intended direction of change (increase, improve, decrease, etc.), and whom the change is intended for. For example:

- Improved school attendance by youth (13–17 years)

- Improved academic achievement by youth (13–17 years)

When you are ready to start writing your questions, consider these criteria:

- Are the questions related to your core program?

- Is it within your control to influence them?

- Are they realistic and attainable?

- Are they achievable within funding and reporting periods?

- Are they written as change statements? That is, do they ask about things that can increase, decrease, or stay the same?

- Are there any big gaps in the progression of impacts?

Several good sources can walk you through the process of outcome measurement. One of the best for developing this plan is Strengthening Nonprofits’ Measuring Outcomes (see sources for direct link to document). There is no need to reinvent the wheel when it comes to resources; we can point you to the right sources and give you some key ideas to think about.

How do you measure change?

To actually measure change, you need criteria for data collection. Take an example from above, improved school attendance: it states what we want to change, but not how the change will be measured. Without a way to measure it, we do not know if we are actually making a difference; we only know that it is our desired change.

When referring to impact and outcomes, measurements come in the form of performance indicators. Indicators are measures that can be seen, heard, counted, or reported using some type of data collection method. To measure your performance, you need a baseline (starting point) and a target (goal). For each impact or outcome statement (evaluation question), you should establish baseline indicators and target indicators in order to effectively evaluate your performance.
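
As a rough illustration of how a baseline and a target make an outcome measurable, the sketch below computes how much of the gap between the two has been closed. The function name, the indicators, and every figure are hypothetical, chosen only to show the arithmetic; they are not drawn from any real program.

```python
def progress_toward_target(baseline: float, target: float, current: float) -> float:
    """Return the share of the baseline-to-target gap closed so far.

    0.0 means no change from the baseline; 1.0 means the target was reached.
    The same formula works for indicators meant to increase or to decrease.
    """
    gap = target - baseline
    if gap == 0:
        raise ValueError("target must differ from baseline")
    return (current - baseline) / gap


# Hypothetical indicator meant to increase: average attendance rate (%).
attendance = progress_toward_target(baseline=82.0, target=90.0, current=86.0)
print(f"Attendance: {attendance:.0%} of the way to target")

# Hypothetical indicator meant to decrease: unexcused absences per student.
absences = progress_toward_target(baseline=12.0, target=6.0, current=9.0)
print(f"Absences: {absences:.0%} of the way to target")
```

A negative result would mean the indicator has moved away from the target since the baseline was taken, which is itself useful information for a formative evaluation.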

Considerations when writing your indicators:

- Are they specific?

- Are they measurable?

- Are they attainable?

- Are they realistic?

Helpfully, a number of groups have already put together lists of indicators by sector. Be sure to check out Perform Well, the Urban Institute’s Outcome Indicators Project, the Foundation Center’s TRASI, and United Way’s Toolfind.

What do you do now?

- Write your outcome statements: who, what, and how.

  - Who will be impacted by the program?

  - What will change as a result of the program?

  - How will it change?

- Write your criteria (performance indicators) for measuring your outcomes.

  - For each impact or outcome statement, you generally need 1–3 indicators (depending on complexity).

- Map them. Map out your envisioned outcomes sequence (again, referring to your logic model), along with your intended indicators.

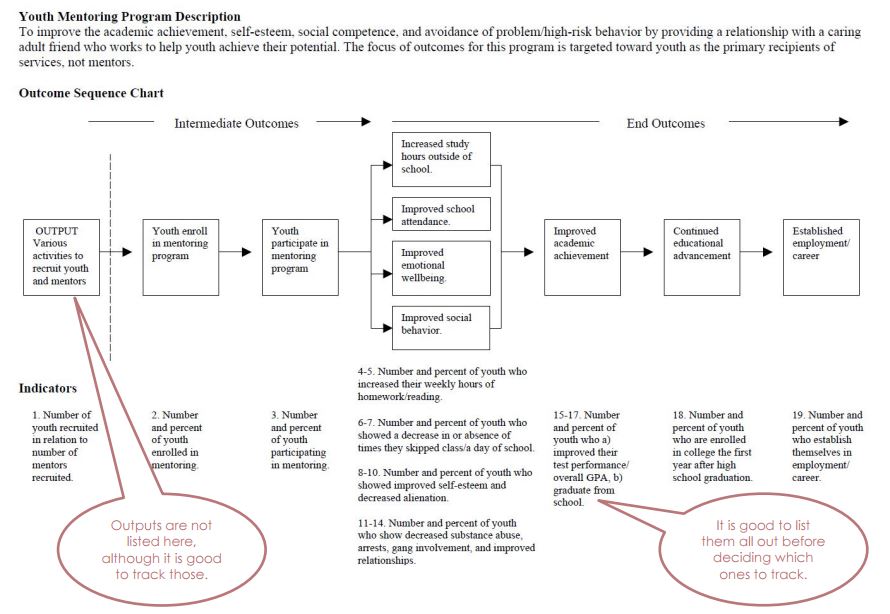

- The accompanying example is taken directly from the Urban Institute’s Outcome Indicators Project for a youth mentoring program.
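
For readers who track outcomes in a spreadsheet or script, the steps above can be sketched as a small record structure. This is only one possible layout, not a format prescribed by any of the sources; the class names, field names, and sample values are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Indicator:
    description: str  # what is seen, heard, counted, or reported
    baseline: float   # starting point
    target: float     # goal


@dataclass
class OutcomeStatement:
    who: str   # who will be impacted by the program
    what: str  # what will change as a result of the program
    how: str   # direction of change (improved, increased, decreased, ...)
    term: str  # position in the logic model: short, intermediate, or long
    indicators: List[Indicator] = field(default_factory=list)

    def summary(self) -> str:
        """Render the statement in the 'change by whom' form used earlier."""
        return f"{self.how} {self.what} by {self.who}"

    def has_suggested_indicator_count(self) -> bool:
        """Check the rule of thumb of 1-3 indicators per statement."""
        return 1 <= len(self.indicators) <= 3


# Hypothetical entry; the term and all numbers are placeholders.
outcome = OutcomeStatement(
    who="youth (13-17 years)",
    what="school attendance",
    how="Improved",
    term="intermediate",
    indicators=[Indicator("Average attendance rate (%)", baseline=82.0, target=90.0)],
)
print(outcome.summary())
print(outcome.has_suggested_indicator_count())
```

Keeping the logic model term on each record makes it easy to sort statements into the outcome sequence when mapping them.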

Once you have done this, you will be able to select the type of evaluation plan appropriate for your needs.

Sources

From “A Framework to Link Evaluation Questions to Program Outcomes,” Journal of Extension, URL: http://www.joe.org/joe/2009june/tt2.php

From “Developing A Plan For Outcome Measurement,” Strengthening Nonprofits, URL: http://strengtheningnonprofits.org/resources/e-learning/online/outcomemeasurement/Print.aspx

From “Measuring Outcomes,” Strengthening Nonprofits, URL: http://www.strengtheningnonprofits.org/resources/guidebooks/MeasuringOutcomes.pdf

From “Outcome Indicators Project,” Urban Institute, URL: http://www.urban.org/policy-centers/cross-center-initiatives/performance-management-measurement/projects/nonprofit-organizations/projects-focused-nonprofit-organizations/outcome-indicators-project

From “Candidate Outcome Indicators: Youth Mentoring Program,” Urban Institute and the Center for What Works, URL: http://www.urban.org/sites/default/files/youth_mentoring.pdf